
KWSN Orbiting Fortress

KWSN Distributed Computing Teams forum


branjo

Prince

Joined: 05 Jan 2006

Posts: 746

Location: Slovakia

Posted: Wed Feb 26, 2014 4:46 pm

| Assimilator1 wrote: | ...

branjo

Re mean & median, agreed median is more useful generally. But for MW the WU times don't fluctuate much (bar the long & short WU differences) if GPU load is near 100% & the GPU has its own CPU core free, so mean & median should give nearly identical times.

Btw, the big difference in WU times of 213.76 credit WUs I had already pre-warned you about in my benchmark requirements. Since ~20th Feb MW have released 2 different speed 213.76 credit WUs; as I said, I only wanted the times for the 'long' ones.

Btw2, where did you get the Titan times from? I don't see that rig listed in your computers, which it would be if someone were crunching for you.

I looked at computers beyond 30 days too........

... |

- Some people on MW message board calculated their averages from "fastest times" (e.g. here http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=3465&postid=61233#61233 ).

- I calculated only the 213.76 WUs (both the "short" 700's and the "long" 1,100's, but all were/are 213.76); there are also shoooooooort 106.88 ones taking ca. 360 secs, per http://milkyway.cs.rpi.edu/milkyway/results.php?userid=398806&offset=20&show_names=0&state=0&appid=10 Probably the language barrier.

- Titan's results are from one of my wingmen - bcavnaugh: http://milkyway.cs.rpi.edu/milkyway/show_host_detail.php?hostid=561866

I can't imagine why we were rivals in the far-far-far past, when I was not a kaNI!ght yet

Cheers

ETA: will test CPU after WCG finishes its current Beta

ETA2: using app_config is more than desirable


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Wed Feb 26, 2014 6:30 pm

| branjo wrote: | - Some people on MW message board calculated their averages from "fastest times" (e.g. here http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=3465&postid=61233#61233 ). |

Yea, but I haven't used his time yet. I believed at the time he'd be able to get his GPU util up; however, that doesn't look possible with NVidia GPUs unfortunately, at least with some WUs.

I might yet be able to get a time from him if the WUs on which he does get 99% load happen to be the right ones (see this post by him http://milkyway.cs.rpi.edu/milkyway/forum_thread.php?id=3465&postid=61239 ).

Re your Titan man, ahhh ok, I see he's crunching for another team. I thought you said he was crunching for you for a bit, oh well nm.

I see what you mean by the times, they seem to fluctuate quite a bit, from 350-380s.

Yankton

Would appreciate that

_________________

Team AnandTech - SETI, F@H, Muon1, MW@H, Asteroids@H, LHC@H, Skynet, Rosetta@H

Last edited by Assimilator1 on Fri Mar 07, 2014 2:17 pm; edited 1 time in total |

|

| Back to top |

|

|

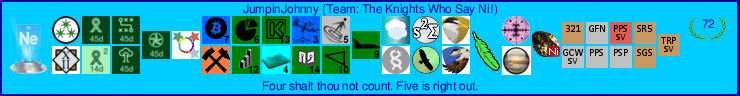

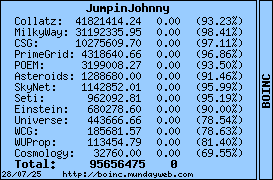

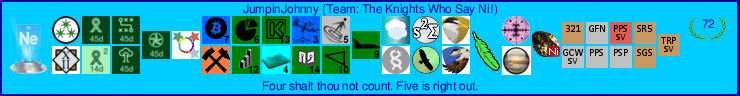

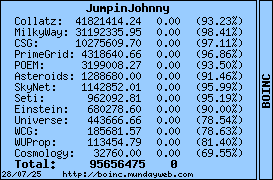

JumpinJohnny

Prince

Joined: 28 Mar 2013

Posts: 1247

Location: Western New Hamster

Posted: Wed Feb 26, 2014 9:49 pm

| Assimilator1 wrote: | Thx for your results JJ

Your 4870 times make me think that my 4870's GPU load wasn't as near 100% as I thought.....

Btw why did you use mean & median average for the 2 different WUs?

Anyway, 444s looks right to me too.

Are you going to give some CPU times too? |

I used the more "accurate" average on the longer WUs partly because that was the number you were looking for specifically. Also, while some of the shorter WUs (213.76) were running I was doing other things on the computer, so I needed to exclude a bunch of them from the mix, and some of them looked suspiciously low.

I consider the "larger" WUs' average (443.77 sec.) to be a pretty accurate test of the 4870 in my rig while running the GPU fairly 'hot' but at stock speeds.

For the CPU (AMD Phenom II X4 965 @ 3411 MHz), the longer Milkyway@Home Separation (Modified Fit) v1.28 WUs are running an average of 13,774 seconds. The shorter ones are shrubbing at around 6,900.


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Thu Feb 27, 2014 7:43 am

| Task | Work unit | Computer | Sent | Time reported | Status | Run time (sec) | CPU time (sec) | Credit | Application |
|---|---|---|---|---|---|---|---|---|---|
| 677338523 | 506693807 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 5:57:27 UTC | Completed and validated | 413.86 | 4.48 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338522 | 506693806 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:52:44 UTC | Completed and validated | 413.70 | 4.71 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338521 | 506693805 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:04:20 UTC | Completed and validated | 413.67 | 5.21 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338520 | 506693804 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:25:07 UTC | Completed and validated | 413.27 | 4.80 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338519 | 506693803 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:45:56 UTC | Completed and validated | 413.40 | 4.56 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338518 | 506693802 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:38:56 UTC | Completed and validated | 414.34 | 5.19 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338517 | 506693801 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:18:13 UTC | Completed and validated | 413.68 | 5.05 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338516 | 506693800 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:11:15 UTC | Completed and validated | 414.13 | 4.84 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677338515 | 506693799 | 547725 | 27 Feb 2014, 4:19:55 UTC | 27 Feb 2014, 6:32:00 UTC | Completed and validated | 413.51 | 4.74 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337914 | 506693355 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:08:56 UTC | Completed and validated | 181.36 | 3.63 | 106.88 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337911 | 506693352 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:43:35 UTC | Completed and validated | 413.82 | 4.88 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337910 | 506693351 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:36:42 UTC | Completed and validated | 413.26 | 4.43 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337908 | 506693349 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:50:31 UTC | Completed and validated | 413.88 | 4.68 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337907 | 506693348 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:15:58 UTC | Completed and validated | 419.63 | 5.62 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337906 | 506693347 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:23:03 UTC | Completed and validated | 416.56 | 4.62 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337896 | 506693337 | 547725 | 27 Feb 2014, 4:18:46 UTC | 27 Feb 2014, 5:29:46 UTC | Completed and validated | 413.79 | 4.71 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337249 | 506692834 | 547725 | 27 Feb 2014, 4:17:40 UTC | 27 Feb 2014, 4:49:28 UTC | Completed and validated | 354.64 | 4.60 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337248 | 506692833 | 547725 | 27 Feb 2014, 4:17:40 UTC | 27 Feb 2014, 4:40:06 UTC | Completed and validated | 351.66 | 4.10 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337247 | 506692832 | 547725 | 27 Feb 2014, 4:17:40 UTC | 27 Feb 2014, 4:23:40 UTC | Completed and validated | 352.85 | 4.23 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 677337246 | 506692831 | 547725 | 27 Feb 2014, 4:17:40 UTC | 27 Feb 2014, 5:05:57 UTC | Completed and validated | 351.63 | 3.87 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |

I don't think the app was written with these cards in mind; the card pretty much demolishes every other project. These were all done in DP mode with a core completely free. The app uses very little CPU. I didn't check load while they were running. The forum says some people are running 6 concurrently on a Titan and still have them take six minutes a pop.
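The run times listed in this post can be summarised with a short Python sketch that compares the mean and median of the long 213.76-credit WUs. The 400 s cut-off used to separate "long" from "short" 213.76 WUs is an assumption that happens to fit this host's times; it is not something the project defines.

```python
from statistics import mean, median

# (run time in seconds, credit) for the 20 tasks listed in this post.
tasks = [
    (413.86, 213.76), (413.70, 213.76), (413.67, 213.76), (413.27, 213.76),
    (413.40, 213.76), (414.34, 213.76), (413.68, 213.76), (414.13, 213.76),
    (413.51, 213.76), (181.36, 106.88), (413.82, 213.76), (413.26, 213.76),
    (413.88, 213.76), (419.63, 213.76), (416.56, 213.76), (413.79, 213.76),
    (354.64, 213.76), (351.66, 213.76), (352.85, 213.76), (351.63, 213.76),
]

LONG_WU_CUTOFF_S = 400.0  # assumption: threshold picked by eye for this host

# Keep only the 213.76-credit tasks that ran longer than the cut-off.
long_times = [t for t, credit in tasks
              if credit == 213.76 and t > LONG_WU_CUTOFF_S]

print(f"long WUs: {len(long_times)}")
print(f"mean:     {mean(long_times):.2f} s")
print(f"median:   {median(long_times):.2f} s")
```

With these inputs the two averages differ by about half a second, which fits the earlier remark that mean and median should be nearly identical when run times barely fluctuate.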

_________________

Some days are worse than others.

Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Thu Feb 27, 2014 1:00 pm

JumpinJohnny

Thx for the CPU time, & yea fair enough re averages.

Yankton

Yea I've heard (earlier in this thread?) that OpenCL is poorly optimised for NVidia. It's a shame because the Titan's DP power is immense! About 50% more than the best AMD retail card (7970 GE), & that's not including the Black version.

Anyway it looks like your average for long 213.76 WUs is ~414s. Before I post that time, can you see what your GPU load is on 1 of those WUs?

Oh also, have you enabled native DP in the NV ctrl panel? (see previous page).


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Thu Feb 27, 2014 2:42 pm

Per GPU-z that is at 18% load. Yes it is DP mode. OpenCL works great for this card elsewhere, I think the problem is more with the app for this project. The card is quite amazing!


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Thu Feb 27, 2014 3:21 pm

Only 18% load? Good god no wonder!

Pity MW doesn't make good use of it

Re DP, err so is native DP mode enabled then?


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Thu Feb 27, 2014 4:15 pm

Yes, it is enabled. Through the control panel. All good.


branjo

Prince

Joined: 05 Jan 2006

Posts: 746

Location: Slovakia

Posted: Thu Feb 27, 2014 4:39 pm

Yankton, if you try, let's say, 6 concurrent GPU tasks on 1 CPU thread using app_config, would the shrubbing times remain the same?

I mean:

Code:

```xml
<app_config>
  <app>
    <name>milkyway_separation_modified_fit</name>
    <gpu_versions>
      <gpu_usage>0.166666</gpu_usage>
      <cpu_usage>0.166666</cpu_usage>
    </gpu_versions>
  </app>
</app_config>
```
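The `0.166666` in the config above is 1/6 rounded down, so six tasks add up to just under one whole GPU. A minimal Python sketch of that arithmetic follows, assuming the client only starts another task while the summed `gpu_usage` stays at or below 1.0. The helper names `gpu_usage_for` and `render_app_config` are made up for illustration and are not part of BOINC.

```python
def gpu_usage_for(n_tasks: int, digits: int = 6) -> float:
    """Largest gpu_usage with `digits` decimals such that n_tasks * value <= 1."""
    scale = 10 ** digits
    # Integer division rounds down, so the total never exceeds one GPU.
    return (scale // n_tasks) / scale


def render_app_config(app_name: str, n_tasks: int, cpu_usage: float) -> str:
    """Build app_config.xml text using the same tags as the post above."""
    return (
        "<app_config>\n"
        "  <app>\n"
        f"    <name>{app_name}</name>\n"
        "    <gpu_versions>\n"
        f"      <gpu_usage>{gpu_usage_for(n_tasks)}</gpu_usage>\n"
        f"      <cpu_usage>{cpu_usage}</cpu_usage>\n"
        "    </gpu_versions>\n"
        "  </app>\n"
        "</app_config>\n"
    )


# App name copied from the post; check it against your own client before use.
print(render_app_config("milkyway_separation_modified_fit", 6, 0.166666))
```

Rounding up instead (0.166667) would make six tasks sum to slightly more than 1.0, which under the stated assumption could leave only five running.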


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Thu Feb 27, 2014 5:36 pm

There are a couple of threads in the Milkyway forum stating they run 6 on that card with no drop in time. The mod fit uses almost no CPU, so 1 thread should be plenty for six instances. I'll give it a whirl later and see what happens.


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Fri Feb 28, 2014 3:14 pm

6 at a time with the same time would be some pretty awesome output, equals 60s/WU then!

(about to update table, added a GTX 770 & another HD 5850 time)
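The 60s/WU figure is the per-task run time divided by the number of tasks sharing the GPU. Here is a minimal Python sketch of that arithmetic, using the "six minutes a pop" and ~414 s figures quoted earlier in the thread. It assumes every concurrent task takes the same time, which the thread itself suggests is only roughly true.

```python
def effective_seconds_per_wu(run_time_s: float, concurrent: int) -> float:
    """Average wall-clock seconds per finished WU when tasks share one GPU."""
    return run_time_s / concurrent


def wus_per_hour(run_time_s: float, concurrent: int) -> float:
    """Finished WUs per hour under the same equal-run-time assumption."""
    return 3600.0 * concurrent / run_time_s


# One long WU at a time (~414 s) versus six at once at six minutes each.
print(effective_seconds_per_wu(414.0, 1))
print(effective_seconds_per_wu(360.0, 6))
print(wus_per_hour(360.0, 6))
```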


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Sun Mar 02, 2014 4:51 pm

Running 5 simultaneously. 18 * 5 = 90, plus overhead for multiple tasks; 5 tasks put it at 97% load. Tasks might be bigger now, and the app is relatively new, so it might be more efficient than when people were running 6 - 7 of them. Tasks with a longer name (the modbpls) took a bit longer to run than the other tasks. Watching GPU-Z, the modbpls also used more of the card, about 26% a pop. Since you can't select what you get, I will set mine to run 4 at a time in the future in case it stocks up on the modbpls. Some of the times below may be slightly longer than they would be if running only 4, due to slight overloading. Will post times later after some validation happens.
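The load arithmetic in this post can be sketched in Python. This assumes per-task GPU load simply adds up (18% for the shorter tasks, 26% for the modbpls, as read from GPU-Z) and ignores the multi-task overhead mentioned above, so it is a rough estimate rather than a model of the card.

```python
SHORT_LOAD_PCT = 18.0  # per-task load reported for the shorter tasks
LONG_LOAD_PCT = 26.0   # per-task load reported for the modbpl tasks


def estimated_load(n_short: int, n_long: int) -> float:
    """Summed GPU load in percent, assuming loads add linearly."""
    return n_short * SHORT_LOAD_PCT + n_long * LONG_LOAD_PCT


def max_concurrent(per_task_pct: float, budget_pct: float = 100.0) -> int:
    """How many identical tasks fit within the load budget."""
    return int(budget_pct // per_task_pct)


print(estimated_load(5, 0))           # five shorter tasks
print(estimated_load(0, 4))           # four modbpls: slightly over 100%
print(max_concurrent(SHORT_LOAD_PCT))
print(max_concurrent(LONG_LOAD_PCT))
```

Under this estimate four modbpls at once would already exceed 100%, which is in line with the expectation of slight overloading when the queue fills with the longer tasks.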


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Sun Mar 02, 2014 10:10 pm

| Task | Work unit | Computer | Sent | Time reported | Status | Run time (sec) | CPU time (sec) | Credit | Application |
|---|---|---|---|---|---|---|---|---|---|
| 680134843 | 508294775 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:37:06 UTC | Completed and validated | 379.49 | 32.06 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134842 | 508294773 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:49:23 UTC | Completed and validated | 357.06 | 9.52 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134841 | 508294770 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:37:06 UTC | Completed and validated | 380.49 | 31.70 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134840 | 508294764 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:43:26 UTC | Completed and validated | 382.02 | 34.13 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134838 | 508268962 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:49:37 UTC | Completed and validated | 356.93 | 9.86 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134837 | 508241942 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:45:42 UTC | Completed and validated | 377.20 | 29.72 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134836 | 508198420 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:37:06 UTC | Completed and validated | 379.49 | 32.04 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134835 | 508190353 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:45:42 UTC | Completed and validated | 464.58 | 54.71 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134831 | 508057320 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:38:34 UTC | Completed and validated | 466.87 | 55.08 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134830 | 507827219 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:44:34 UTC | Completed and validated | 381.88 | 34.13 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134829 | 507738891 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:46:50 UTC | Completed and validated | 452.42 | 43.99 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |
| 680134828 | 507692832 | 547725 | 2 Mar 2014, 21:30:37 UTC | 2 Mar 2014, 21:38:34 UTC | Completed and validated | 466.87 | 56.89 | 213.76 | Milkyway@Home Separation (Modified Fit) v1.28 (opencl_nvidia) |

Times are slightly longer. Likely due to higher usage of certain shrubs. I'll leave it at 4 simultaneous for now. Should work slightly better than 5 did.


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Thu Mar 06, 2014 2:26 pm

Thanks for all the results.

I'm not sure what to post to the table atm. Putting in the single WU time where it is hugely under-utilised seems unfair, but I had also intended to avoid multiple WU times as they vary far more, made even worse by the mix of long & short 213.76 WUs we get now! However, the multiple WU time would be the only 1 fair to the Titan!

It's a pity you can't force the client to only crunch long 213.76 WUs, at least the average of that would give a broad idea of its performance.

**********************************************************

No other results folks?


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Fri Mar 07, 2014 2:29 pm

Yankton

Are those last times you posted for running 5x WUs at once?

Got the 4x times yet?

Btw I have posted the single WU time with a foot note about GPU load.

I may post your concurrent WU times range as another footnote at the base of the table.

Have you tried increasing CPU time via an app_info to improve single GPU WU load?

Last edited by Assimilator1 on Fri Mar 07, 2014 2:43 pm; edited 1 time in total

Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Fri Mar 07, 2014 2:40 pm

That was with 5, which is why they are a bit longer than they should be. With those other units load was maxed out. I can run a couple of sets of 4 if you want. It should run 4 with some slowing if they are all the longer units: 18% load for the short ones, 26% for the long ones.


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Fri Mar 07, 2014 2:45 pm

Lol, just edited my previous post the same time you posted.

Yea some x4 WU times would be good thx.


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Fri Mar 07, 2014 2:49 pm

Alrighty. At work at the moment, I'll see if I can pull some tonight. The app_info doesn't affect cpu time - just gives you the listed info in your wu description in the boinc manager. It uses what it uses. You can change the gpu usage though to change how many units it will do at a time. Both types of units had about 1/10th cpu time vs. gpu time so a single core should still be plenty. I'll watch the task manager and see what it gives me though.


Assimilator1

Knight

Joined: 23 Aug 2002

Posts: 40

Location: UK, Surrey, Guildford

Posted: Fri Mar 07, 2014 3:31 pm

| Yankton wrote: | The app_info doesn't affect cpu time |

Apparently there is an option to change that, but I don't know much about these apps so I can't help you atm I'm afraid.

I'll try to find out.......

Ok, I was slightly off, lol:

Quote:

Originally Posted by Assimilator1

Btw Yankton doesn't think the cpu time can be altered in the app info, where would he find it?

Posted by GleeM

He might be right.

I think it is in app_config.xml that the cpu time can be set. In the example below you could change the <cpu_usage> from 0.02 to something higher like 0.50.

```xml
<app_config>
  <app>
    <name>milkyway</name>
    <gpu_versions>
      <gpu_usage>1.0</gpu_usage>
      <cpu_usage>0.02</cpu_usage>
    </gpu_versions>
  </app>
</app_config>
```

From here http://forums.anandtech.com/showthread.php?p=36140058

Don't know if it will help, but probably worth a quick go?


Yankton

Prince

Joined: 27 Sep 2008

Posts: 1702

Location: California

Posted: Fri Mar 07, 2014 7:05 pm

https://boinc.berkeley.edu/dev/forum_thread.php?id=8388

The tags you're thinking of are only used by the BOINC scheduler. They won't actually change how much of the CPU the GPU app wants to use. What they can do is knock another core away from CPU apps.

Say I have <cpu_usage>0.5</cpu_usage> and <gpu_usage>0.33</gpu_usage>

This would run 3 instances of the app on the GPU. BUT, since this info tells the scheduler it wants 0.5 cores per instance, it will remove 2 cores (1.5 rounded up) from CPU app availability. What each instance actually uses could be extremely low (the 0.02 from your example), which means you have one core running at 6% load to feed your GPU for those three instances, but a second core sitting idle because the app_config told the scheduler to reserve 50% of a core three times over. If you have multiple GPUs running multiple instances of certain apps, you might want to figure out what one instance uses and set that in the app_config / app_info so that the scheduler correctly reserves what you need.

So, short form, it's what you reserve for use vs. what you actually use. Does that make sense? I tend to ramble sometimes.
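The reserve-versus-use arithmetic above can be written out as a small Python sketch. The rounding-up of reserved cores follows the description in this post and has not been checked against the BOINC client itself; the function names are made up for illustration.

```python
import math


def instances_per_gpu(gpu_usage: float) -> int:
    """How many tasks fit on one GPU for a given gpu_usage fraction."""
    return int(1.0 // gpu_usage)


def reserved_cores(instances: int, cpu_usage: float) -> int:
    """Whole CPU cores set aside, rounding up as described in the post."""
    return math.ceil(instances * cpu_usage)


def actual_core_load(instances: int, real_cpu_fraction: float) -> float:
    """CPU actually consumed, as a fraction of one core."""
    return instances * real_cpu_fraction


n = instances_per_gpu(0.33)              # gpu_usage from the example
print(n, "instances")
print(reserved_cores(n, 0.5), "cores reserved")
print(f"{actual_core_load(n, 0.02):.0%} of one core actually used")
```

Setting `cpu_usage` close to what one instance really needs keeps the reserved figure near the used figure, which is the point made in the post.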
