KWSN Orbiting Fortress

KWSN Distributed Computing Teams forum

JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

Posted: Wed Sep 23, 2009 1:21 pm Post subject: Collatz on some diff ATI cards

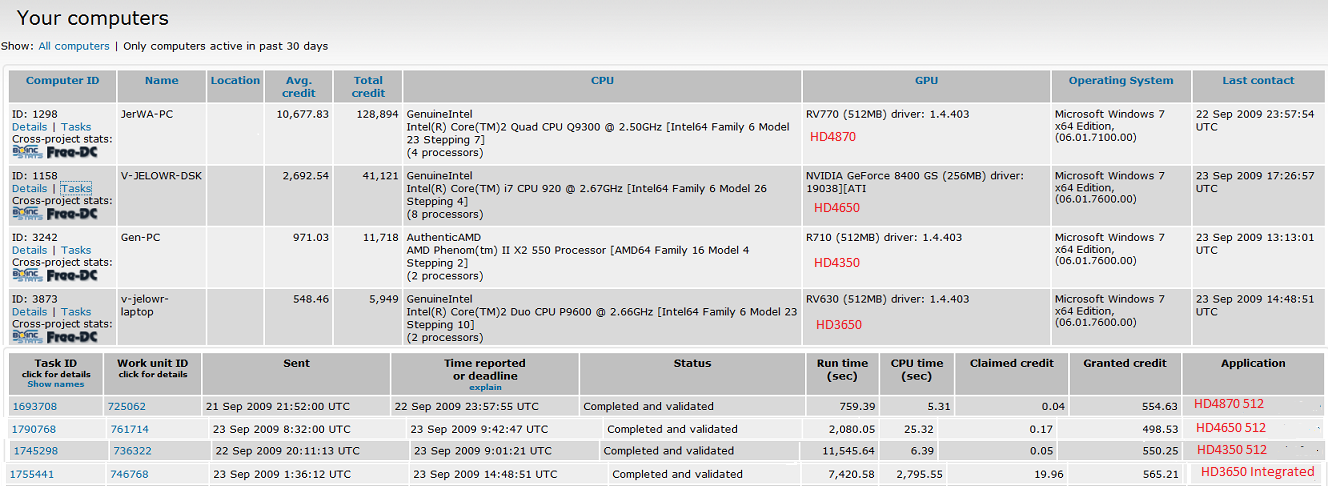

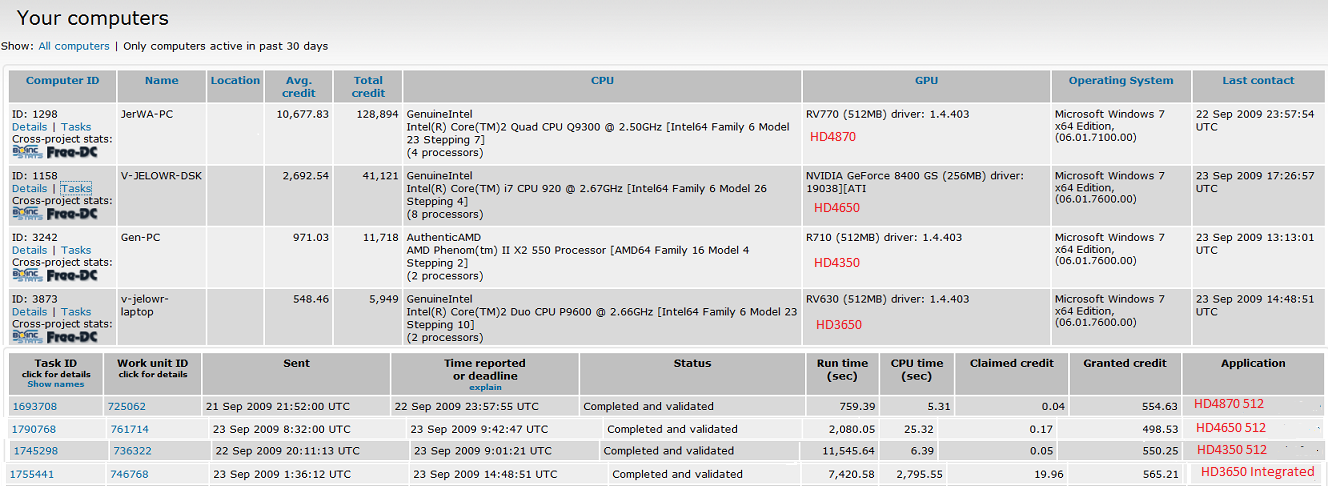

Put this together because of a convo going in another thread, figured it should be split out.

Only the HD4870 is double precision (which is also why it's back on MW hehe). The rest are all single precision "low cost" cards. They haven't been on the project long, as you can tell from the still-low averages (tons and tons of WUs waiting for validation), but you can extrapolate from the info.

For those too lazy to do the math lol:

HD4870 = 0.73 credits/second @ 0.7% CPU time. ~63,072/day.

HD4650 = 0.24 credits/second @ 1.1% CPU time. ~20,736/day.

HD4350 = 0.05 credits/second @ 0.5% CPU time. ~4,320/day.

HD3650 = 0.07 credits/second @ 37.7% CPU Time (ouch). ~6,048/day.
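For anyone who wants to redo the math with their own cards, here is a minimal sketch of the conversion used above. The rates are the ones quoted in this post; the dictionary and function names are just made up for illustration.

```python
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds in a day

# Credits per second as quoted in the post (observed averages, not guarantees).
credits_per_second = {
    "HD4870": 0.73,
    "HD4650": 0.24,
    "HD4350": 0.05,
    "HD3650": 0.07,
}


def credits_per_day(rate):
    """Extrapolate a credits/second rate to a full 24 hours of crunching."""
    return rate * SECONDS_PER_DAY


for card, rate in credits_per_second.items():
    print(f"{card}: ~{credits_per_day(rate):,.0f} credits/day")
```

This assumes the card crunches around the clock at the same average rate, which real hosts rarely manage.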

Certainly worth it, even on the $25 HD4350. On the integrated chipset it's debatable because it will have a very noticeable impact on CPU projects whereas the full cards barely touch the CPU.

Feel free to post your own results, I'm curious

PS: The 8400 GS is for quad monitor, it's not doing any crunching, just happens to be first in the device list reported by BOINC so you don't see the ATI info.

PS again: All of these systems are running the same OS (except my home system, which is still on RC; I'll fix it eventually), the same BOINC manager (6.10.3), the same ATI 0.2 app, the same Catalyst 9.9 drivers, etc.

_________________

Stats: [BOINC Synergy] - [Free-DC] - [MundayWeb] - [Netsoft] - [All Project Stats]

Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

Posted: Wed Sep 23, 2009 3:28 pm

Well it seems it doesn't like a Radeon 9600. It just errors out saying

Quote:

9/23/2009 4:23:44 PM Collatz Conjecture Sending scheduler request: To fetch work.

9/23/2009 4:23:44 PM Collatz Conjecture Requesting new tasks

9/23/2009 4:23:49 PM Collatz Conjecture Scheduler request completed: got 0 new tasks

9/23/2009 4:23:49 PM Collatz Conjecture Message from server: No work sent

9/23/2009 4:23:49 PM Collatz Conjecture Message from server: Your computer has no ATI GPU

9/23/2009 4:23:49 PM Collatz Conjecture Message from server: Your computer has no NVIDIA GPU

That's not really true: a 9600 has a GPU, just perhaps not one with what the project is looking for? But then that's strange, because someone did post about a 9600 in their forums. That said, I renamed the copies of the .dll files as suggested in another thread (driver version is 9.3), even rebooted, and it still wouldn't play. After further browsing I'm not sure it will work at all, as the card has only 128 MB of video RAM. It quite literally was bought in 2004 (though more like December 2004).


JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA


Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

Posted: Wed Sep 23, 2009 4:04 pm

Nah, haven't been able to afford upgrades in years. To make a long story short, I was in college in 2006 as a full time student when my father had a stroke. The finances went to pot, and I had to for all intents and purposes drop out of school and begin looking for work. I didn't exactly have the money to afford the gasoline to get to class, and well...

Things went from bad to worse, and the US economy (as well as the economy in NM where I lived then) just kept getting worse. For new entrants, things are beyond ridiculous. I moved a year ago, with a part time job position waiting for me, which I got laid off from after I returned from eye surgery. I've been unemployed again since March (following 2 months of medical leave), with no end in sight. The job markets are rather impossible atm with this (they don't want to call it as such, but realistically) deep recession or depression we're going through around these parts. Upgrading hardware doesn't exactly take precedence when trying to pay bills is beyond difficult...


KWSN imcrazynow

Prince

Joined: 15 May 2005

Posts: 2586

Location: Behind you !!

Posted: Wed Sep 23, 2009 6:01 pm

Do you think Collatz would run on a GeForce 8500GT? I have one sitting on a shelf practically new and a computer I could easily put it in. It might be worth a shot for a few more credits.

Yes, I am a Ho!


Sir Papa Smurph

Cries like a little girl

Joined: 18 Jul 2006

Posts: 4430

Location: Michigan

Posted: Wed Sep 23, 2009 7:23 pm

Dunno about the 8500 but a GeForce 8600 GT will run a GPUGrid WU in about 4 days....

_________________

a.k.a. Licentious of Borg.........Resistance Really is Futile.......

and a Really Hoopy Frood who always knows where his Towel is...


LanDroid

Prince

Joined: 11 Jun 2002

Posts: 4477

Location: Cincinnati, OH U.S.

Posted: Wed Sep 23, 2009 9:43 pm

Based on the following specs the HD5850 @ around $250 seems like a good compromise compared to the HD5870 @ $400. Not sure I'd spend that much on either one, but 90K per day is rather tempting. Are these "double precision"?

http://www.theinquirer.net/inquirer/news/1556090/amd-launches-radeon-hd-5850-5870

So for GPU newbies what's the deal with CUDA, Nvidia, etc. and how do they compare to the above? How do stream processors compare to cores, does this mean the HD5870 can process 1600 WU's at the same time?

Which projects can be crunched by GPU's? It would have to be a project that actually interests me, I'm not a total stat ho...

If I install just one, will it kill my power supply?

Oh yeah, how noisy are they? I see a fan on some of these... My comp is in my bedroom so....it can't sound like a hairdryer...

JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

Posted: Thu Sep 24, 2009 12:43 am

LanDroid wrote:

Based on the following specs the HD5850 @ around $250 seems like a good compromise compared to the HD5870 @ $400. Not sure I'd spend that much on either one, but 90K per day is rather tempting. Are these "double precision"?

http://www.theinquirer.net/inquirer/news/1556090/amd-launches-radeon-hd-5850-5870

So for GPU newbies what's the deal with CUDA, Nvidia, etc. and how do they compare to the above? How do stream processors compare to cores, does this mean the HD5870 can process 1600 WU's at the same time?

Which projects can be crunched by GPU's? It would have to be a project that actually interests me, I'm not a total stat ho...

If I install just one, will it kill my power supply?

Oh yeah, how noisy are they? I see a fan on some of these... My comp is in my bedroom so....it can't sound like a hairdryer...

Ok, let's see...

GPU computing is a relatively new thing. It's only fairly recently that any thought was given to the fact that GPUs could do some work that CPUs have always done.

Nvidia and ATI both started with their own standards for how you write programs to run on the GPU. Nvidia uses CUDA, ATI uses Brook and CAL, and calls the whole process Stream. They aren't interchangeable, so a program has to be written for one or the other. That's actually going to change soonish, because ATI is starting to push an open standard that DX11 will support, which means in the next year or two we should start seeing applications that will run on either platform (as long as they support DX11, meaning extremely new cards since only the HD5870/5850 support it right now).

It's hard to explain GPU processing in CPU terms. The easiest way to think of it is that a GPU, regardless of brand, is a collection of small parallel processors, rather than one giant processor. A whole bunch of Pentium 4s instead of a single i7 for instance. They're designed to do a whole bunch of tasks at the same time, tasks that start big and are broken down into very small pieces. Cores/stream processors/etc. are essentially useless numbers except to compare similar cards. I.e. the HD4870 has 800 stream processors, and the HD5850 has 1400. If they both run at roughly the same clock speed (and they do, 800 vs 725 MHz) then you can guesstimate fairly well how much more work the HD5850 will do compared to the 4870.
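A rough sketch of that guesstimate, using stream processors times clock as a crude stand-in for throughput. The figures are the approximate ones quoted in this post, reading "800 vs 725" as clock speeds in MHz (an assumption), and the function name is made up for illustration. It only makes sense for comparing similar cards from the same family.

```python
def relative_throughput(sp_a, clock_a_mhz, sp_b, clock_b_mhz):
    """Ratio of card B to card A, using (stream processors x clock) as a crude proxy."""
    return (sp_b * clock_b_mhz) / (sp_a * clock_a_mhz)


# Approximate numbers as quoted in the post, not checked against spec sheets.
ratio = relative_throughput(sp_a=800, clock_a_mhz=800, sp_b=1400, clock_b_mhz=725)
print(f"HD5850 vs HD4870: roughly {ratio:.2f}x")
```

Real applications won't scale this cleanly, since memory bandwidth and how the app is written matter too.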

As for how many WUs run at once that's up to the application. Depending on how it's written you could run 3 (or more) WUs at once on a single card like my 4870, or a single WU that would push the card to its limits. Again, not something that the card determines really, except that SLI/Crossfire on a card (i.e. like the HD4870x2, which is two GPUs on one board) would run 2 WUs at once, even if 1 was designed to max out a card, because it's essentially two cards on one board.

For "double precision" you need to check the Stream SDK I linked above. Generally speaking, enthusiast cards will be double precision, everything else will not, because it's very expensive in terms of processing effort, more so than the "double" would imply. For instance, the new HD5870, king of the single GPU cards, has these stats:

# Processing power (single precision): 2.72 TeraFLOPS

# Processing power (double precision): 544 GigaFLOPS

As you can see, the effort of maintaining double precision cuts its processing power to just 20% of what it can do when only single precision is required. This precision is related to mathematical accuracy. On an interesting side note, projects that use single precision clients are actually making you do twice the work in most cases, in order to bring accuracy up without actually requiring double precision. They're able to do this because running the same calculations twice in single precision is still less work than running them once in double precision! More info here: http://en.wikipedia.org/wiki/Single_precision http://en.wikipedia.org/wiki/Double_precision_floating-point_format
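The 20% figure, and the "twice in single is still cheaper than once in double" point, can be checked with a few lines. This is a back-of-the-envelope sketch using only the two peak numbers quoted above. It assumes a job takes the same number of operations either way, which is a simplification, and the variable names are made up.

```python
single_precision_gflops = 2720.0  # 2.72 TeraFLOPS, as quoted
double_precision_gflops = 544.0   # as quoted

# Fraction of single precision throughput left when running double precision.
dp_fraction = double_precision_gflops / single_precision_gflops
print(f"Double precision runs at {dp_fraction:.0%} of single precision speed")

# Relative time for a fixed number of operations (arbitrary units).
operations = 1.0
time_two_single_passes = 2 * operations / single_precision_gflops
time_one_double_pass = operations / double_precision_gflops
print(f"One double pass costs {time_one_double_pass / time_two_single_passes:.1f}x "
      f"as long as two single passes")
```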

For LOTS more info on GPGPU in general, see here: http://en.wikipedia.org/wiki/GPGPU

As for blowing up your power supply, that depends on the card, and your computer. If you use reputable review sites like [H]ardOCP and Hardware Canucks ( http://www.hardwarecanucks.com/ ) they will show power usage for the video cards. Generally speaking, you want to avoid any double GPU card without at least an 850+ watt PSU. As for single GPU cards, you can guesstimate how much power they use based on the plugs they require. Those same review places will include noise info as well. In most cases the enthusiast cards will be louder, but none will be too bad if left on auto (if you don't mind the often outrageous temps they will reach on such a setting). There are more expensive models that have been upgraded by the brands to have better cooling, but sometimes that's just a gimmick; again, look to the good review sites to see how well they actually cool and how loud they are.

PCIe power connectors come in two flavors, 6 and 8 pin. Here's a 6 pin: http://www.erodovcdn.com/erodov/reviews/guide/pci-e-connector/pci-e-power-connector-2.jpg

The 6 pin connectors are rated up to 75W each, and the PCIe x16 slot itself can supply up to another 75W, so if a card has one 6 pin then you know it will (should) never use more than 150 watts. If it has two, then you're looking at up to 225 watts. The 8 pin connectors are rated up to 150 watts each, so if the card has one (or more!) of those, you're gonna need a serious power supply. A fairly common combination is one 6 pin and one 8 pin, which means the card can use up to 300 watts including the slot.
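The connector arithmetic is easy to sketch. The 75W and 150W connector ratings are the ones given above; the 75W slot allowance is the PCI Express limit for an x16 graphics slot. The helper names are hypothetical, and these are ceilings for guesstimating, not measured draw.

```python
CONNECTOR_WATTS = {"6-pin": 75, "8-pin": 150}  # rating per auxiliary connector
SLOT_WATTS = 75  # what a PCIe x16 graphics slot can supply on its own


def connector_budget(connectors):
    """Maximum power available from the auxiliary connectors alone."""
    return sum(CONNECTOR_WATTS[c] for c in connectors)


def board_budget(connectors, slot_watts=SLOT_WATTS):
    """Upper bound for the whole card: slot power plus auxiliary connectors."""
    return slot_watts + connector_budget(connectors)


for plugs in (["6-pin"], ["6-pin", "6-pin"], ["6-pin", "8-pin"]):
    print(plugs, connector_budget(plugs), "W from plugs,",
          board_budget(plugs), "W including the slot")
```

Check the review sites for what a card actually pulls under load before sizing a PSU.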

I don't have a list of GPU projects, but off the top of my head the projects are:

NVIDIA: GPUGrid, SETI, MilkyWay, Collatz

ATI: MilkyWay, Collatz

I'll throw some more links in here and sort it eventually...

NVIDIA GPUs that support CUDA: http://www.nvidia.com/object/cuda_learn_products.html

Official BOINC GPU page with basic info/how tos: http://boinc.berkeley.edu/gpu.php

Let me know if I missed anything

Oh, and as for prices, keep two things in mind: 1) New stuff is always expensive, 2) MSRP doesn't usually mean anything. There are already HD5870s going for $379, and a few special sales down in the $349 range. For what it's worth, it's competing with a $450 Nvidia GPU fairly well. Unfortunately the reverse is also true due to limited supply, and some places are selling them for as much as $450. The HD5850 is supposed to have some down in the $239 range, and that's more of what I'm shopping for too. I also figure I won't buy until the end of the year anyways (money limitations), so I'll just keep an eye out for sales hehe.


LanDroid

Prince

Joined: 11 Jun 2002

Posts: 4477

Location: Cincinnati, OH U.S.

Posted: Thu Sep 24, 2009 5:39 am

Wow, thanks - more info than I bargained for... I'll study up on this...

Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

Posted: Thu Sep 24, 2009 7:49 am

One can get into a fair amount of depth, be it on a CPU or a GPU. On a CPU, for instance, there can be multiple processing pipelines (think Pentium P5 and later), and then there's stuff like simultaneous multi-threading, where a multi-core processor, as well as multiple processor pipes on a single core, can be treated as one large CPU. Digital was working on something to this effect before their engineers got soaked up by both AMD and Intel. It was in part their answer to the then-announced Itanium, before being abandoned.

http://www.realworldtech.com/page.cfm?ArticleID=RWT122600000000

http://www.realworldtech.com/page.cfm?ArticleID=RWT011601000000&p=1

Incidentally, the EV8, which was in planning then, fell victim to DEC's buyout by Compaq: Compaq discontinued the product line, and the engineers who were working on it went to AMD, with Intel getting some as well. Meanwhile the competition it was planned to be pitted against (IA-64) flopped, as the market didn't go that route (it went x86-64 with AMD), and Intel's planned clock rate increases met competition they couldn't beat, in the form of the laws of physics. They just couldn't continue shrinking the die size to make a continued doubling of clock rate possible (if they could have, we'd probably have 16 GHz - 32 GHz processors now).

In fact, Intel's marketers probably had to be given a heavy dose of reality by their own engineers; for instance, there are limits to how far the die size can be shrunk (Si atoms have a definite size), and increasing heat generation doesn't just disappear. Intel went with the market in the end; and even here, places like Sandia are looking at alternatives to the current day computer, because of these selfsame limits Intel couldn't beat.


JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

Posted: Thu Sep 24, 2009 10:59 am

Itanium hasn't been abandoned btw; they're still made, it's just that they're only in use in servers.

The dies are still shrinking, Intel just announced their first 22nm manufacturing for instance.

As for heat, you generate less with a smaller die. That's one of the reasons why the new i7 860 has a 95 watt thermal rating but competes head to head with the 125 watt i7 920 in terms of processing performance. Same reason the new HD5870 graphics card (using a new 40nm process) stomps its predecessor (HD4890) but uses only 5 watts more power.

The articles you linked are 9 and 8 years old lol. A LOT has changed.


Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

Posted: Thu Sep 24, 2009 4:57 pm

Actually, the reason I linked that was basically to say "well, there are many levels at which one could look at processing on either". Much of the technology that went into it, though (and the EV8 never really did come out), did get adopted by both AMD and Intel. The front-side bus of the original Athlons was a cost-reduced implementation of the EV6 bus (the Alpha 21264 used a 128-bit memory bus implementation; AMD used the EV6 bus but 64 bits wide). The integrated memory controller which went into the Athlon 64s was a change made to the EV7 processor, etc. It shouldn't be a surprise, however, as AMD and Intel got the former DEC engineers after DEC fell into financial problems. As such their engineering know-how was absorbed by AMD and Intel, in much the same way as happened when nVidia acquired 3dfx after 3dfx's bankruptcy, etc.

For all intents and purposes, Intel's plans with Itanium did get abandoned. Remember initially they did not want to go x86-64. However when AMD's processors sold, their hand sorta got forced. And as to server markets, that's not exactly cut and dry, as many have gone the x86-64 route. Others simply hadn't bought into it (aka Alpha had disappeared, but the PPC, SPARC, and the like remain).

The dies are not shrinking anywhere near what they were. .09 micron was announced how long ago? And as to continued advances in that regard? The pace has surely slowed; as was also expected. The problem is several fold, but at the end of it, there's a definitive problem that an atom is of a said given size.

http://en.wikipedia.org/wiki/Silicon

places silicon's covalent radius at 111 pm, and the "Van der Waals radius" at 210 pm. 22 nm would be 22,000 pm. One cannot go below the stated size of the atom without hitting the subatomic, aka the quantum, and at that point classical computing theory would have to give way to quantum computing theory. One way to get around this would be to substitute C atoms for Si atoms as the semiconductor of choice. They can both be used as semiconductors, and with the same valence electron configuration they also share similar chemical properties, etc. However, that isn't the only problem encountered, hence why the move from .13 micron to .09 micron manufacturing wasn't as unproblematic as the move from .19 to .13 etc...

http://hothardware.com/Articles/AMDs-09-micron-A64-3500-Overclocking-Thermals--Power-Consumption/
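To put the atom-size argument in numbers: 1 nm is 1,000 pm, so a 22 nm feature works out to 22,000 pm. The sketch below divides that by the Van der Waals diameter quoted from the Wikipedia page. Treating atoms as hard spheres lined up in a row is a simplifying assumption (real lattice spacing differs), and the names are made up for illustration.

```python
PM_PER_NM = 1000  # picometres per nanometre


def nm_to_pm(nanometres):
    """Convert a length in nanometres to picometres."""
    return nanometres * PM_PER_NM


feature_pm = nm_to_pm(22)   # a 22 nm process feature, in pm
vdw_radius_pm = 210         # Van der Waals radius of Si, as quoted above

# Crude count of atoms side by side across one feature (hard-sphere assumption).
atoms_across = feature_pm / (2 * vdw_radius_pm)
print(f"22 nm = {feature_pm:,} pm, or about {atoms_across:.0f} atoms across")
```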

.13 also posed difficulties which weren't seen at .19 micron, for instance the woes that nVidia, ATI, and others were seeing at the time. This hasn't all proven to be a smooth transition, and the above certainly would have given Intel reason to: 1. abandon the Pentium 4 design in favor of AMD's path with x86-64 and go multi-core, as they did with Core Duo, quad core, etc.; 2. drop the "clock is everything" argument they had always used from a marketing standpoint in their competitive war with AMD, and proceed to release more efficient (and higher performing) processors with a lower internal clock (as AMD had been doing).

As to Itanium, the market wasn't buying it up; and there's the fact that a continued doubling of clock rate and chip complexity every 18 months (Moore's law) isn't feasible anymore. If it were, we would have seen Intel double their 4 GHz Pentium 4 18 months after its release, and double an 8 GHz processor 18 months after its release, etc. The clock rate increases we were seeing in the past weren't sustainable (heat generation being a prime reason why), which is why the Pentium 4 was so quick to be replaced by the Core Duo and later (based on what was previously referred to internally as "Yamhill" or something to that effect, as memory serves).

Incidentally, I am aware of the reasons for a die shrink, which is why I brought it up in connection with heat generation, though it isn't the only measure (moving from Al interconnects to Cu ones for instance, and there was a look at methods of cooling itself...). And in this regard, we can also look from generation to generation: from the 486 and earlier, which could run with a heatsink but no fan, to the Pentium (P5) which needed a fan, to the jokes about the Pentium Pro and Pentium II being Intel space heaters, and on up. All joking aside, the heat from the Pentium II of 10 years ago pales in comparison to the heat generated by today's processors, which is a combination of both increased clock rates and increased chip complexity, aka transistor count. It was in the midst of all this that Intel shied away from advertising "our processor is better because of higher clocks", a message which was easier for customers to understand, and moved toward their current product strategy.

Fred brought up another issue in reference to this, where he mentioned a downside to constantly decreasing die size: the number of electrons in a given transistor itself. To understand this, one has to move away from the idea, given at a basic level in computer theory, of binary bits being "on" or "off", and look at the 1 and 0 as relating to relative voltage states in a circuit that is really on the whole time it's powered up.

The significance is this. Electrons don't exactly "like" remaining in the excited energy state longer than they have to. They have a tendency to try to dissipate this excess energy (in the form of heat for instance, aka where it's coming from), in order to drop down to a lower energy state. Against this, one's applying a voltage. But in point of fact, all electrons are not existing in either one energy state or the other at a given time. Rather one is energizing some electrons, others are dissipating some of their energy as heat, etc. So one discriminates whether the transistor is in a "1 state" or a "0 state". So let's say for instance a circuit has 1,000 electrons associated with it: if 900 are in the higher voltage state and 100 are in the lower voltage state, it's safe to assume it's a 1. If 900 are in the low voltage state, one can assume a 0; but what if 500 are in the high voltage state, and 500 in the low voltage state? Which is it? One has an indeterminate state, you can't tell... In point of fact, the likelihood it should be a 1 or a 0 is represented by 2 bell curves, based on the probability of how many of the electrons involved will be in either energy state, and closer to the middle there is some overlap in which bit it actually is.

Now the deal is this: as one continues to shrink the die size (fewer Si atoms make up the given circuit), there are fewer and fewer electrons available from which to determine if it's a 1 or a 0. The chance of encountering an indeterminate state, or of seeing bit errors, can increase.... If one went to say 1 Si atom, with 14 electrons (there are 14 protons in its nucleus), then the likelihood of this happening would be much greater than, if well...
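A toy model of that argument, not real device physics: assume each electron independently sits in the high state with 90% probability when a 1 is intended (the 900-of-1,000 figure above), and call the bit unreadable whenever half or fewer are high. Both the independence and the half-way threshold are assumptions made purely for illustration, as is the function name.

```python
from fractions import Fraction
from math import comb


def misread_probability(n_electrons, p_high=Fraction(9, 10)):
    """Chance that half or fewer of n electrons are high when a 1 was intended.

    Binomial tail sum, done with exact fractions so tiny values don't underflow.
    """
    threshold = n_electrons // 2
    total = sum(
        comb(n_electrons, k) * p_high**k * (1 - p_high) ** (n_electrons - k)
        for k in range(threshold + 1)
    )
    return float(total)


for n in (1000, 100, 14):
    print(f"{n:>5} electrons: misread chance {misread_probability(n):.3g}")
```

In this model the overlap between the two "bell curves" widens quickly as the electron count drops, which is the trend being described, whatever the true numbers are.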

At the time problems with the manufacturing processes were coming up in the tech news, I was attending college at UNM. Many of the teachers there also have 2nd jobs (or should I say their teaching jobs are their 2nd jobs) at Sandia National Laboratories.

http://www.sandia.gov/

Sandia actually wasn't far from where I lived. I also heard mention of some (though obviously not all, as their work extended into the realm of the classified) of what they were doing. One thing which was mentioned is exactly this limit, and how the current manufacturing process is considered to be nearing EoL (end of life). For this reason, they were looking at replacements for the computer based on silicon semiconductor technology. One of the replacements they were (likely still are) looking at is the optical computer, a second would be a quantum computer, and there was mention of a third (though the professor/Sandia worker wasn't sure off the top of his head what the third was). That limits are being reached was apparent to them, for which reason research was being conducted with the goal of creating a replacement technology before things reached that point.

Now Sandia is known for its research into the realm of the nuclear (and they do have access to the country's weapons grade uranium, nuclear program, etc), for which a security clearance is required to even get an interview to work there (I had looked). However nuclear technology isn't the only thing they look into, and computer technology is another area in which they do R&D for the US DoD.


JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

Posted: Thu Sep 24, 2009 10:06 pm

Uh, ok? My point was that you were linking almost a decade old documentation, like that's still relevant.

Itaniums: I never once heard them in reference to the desktop. Ever. They started in the Xeon server market and I never saw them trying to move out of it. For that matter I only ever heard of the DEC Alphas in the desktop space a handful of times before those went away. The IA64s are still being sold, still being developed, which hardly sounds like abandonment considering it's been almost a decade? The problem with RISC stuff is you have to program for it, and there's no market for another platform in the end user space, especially now. Success? Not hardly. But they ARE still around all this time later.

As for the server market being x86-64, that depends who you talk to. I'm not talking about the cheap little 1U Dell servers you fill a rack with that are sissier than my desktop. Look in the big iron space, x86-64 is almost unheard of there. But there are IA64s. Is IA64 mainstream? Nope. It's a niche market, I never implied otherwise.

As for manufacturing, you yourself said it... the limitation you're talking about would require them to shrink their process 100 fold before encountering it. I highly doubt it's even on their list of concerns right now; we'll be off silicon long before they shrink to a .2 process. As for electrons and all the rest of that, oky doky. I'm sure the fact they're lowering voltages, using gates that actually work, decreasing electrical distance and such aren't playing into working around all these limitations or anything.

RE: Changes in process and timelines, look at the last 10 years. How long did it take to go from each? It's surely accelerating, not slowing down. We've already gone from 90 to 65 to 45 to 32 to 22 in the last 5 years alone.

Moore's law says nothing about speed, only transistor count. And it's still alive and well. Part of Intel's 22nm process announcement mentions Moore's law specifically, and that it will allow them to continue the exponential growth that it refers to. And, more generally/forward looking, they've already got processes that will allow them to make the next step when they're ready.

Processor speed is a misnomer anyways. It's never been an accurate way to gauge performance because it's too simple a metric. Want to know why they aren't doubling speeds? It's easier to get the performance other ways. For the same reason they didn't go from 100nm or whatever straight to 22nm. No need, no market, no profit. Sell them system a today, and system a.5 tomorrow. Jumping straight to system K isn't profitable unless you can keep your competitors out of your labs, and the anti-trust courts out of your pocketbook.


Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

Posted: Thu Sep 24, 2009 10:33 pm

Alphas were used in the server market, as well as for high end workstations. People I knew who designed Intel, Alpha, and SPARC systems had many clients, including UNMH among others, who used them to hold their databases. The Alpha machines built for the university hospital cost $100,000 when used for such purposes. Carol mentioned that fact to me when she also mentioned that the university, which had asked them to waive the deposit on the machines, was seriously delinquent in paying its account. And when they'd call to get an accounting, they'd just be transferred from dept to dept with no one taking responsibility (standard bureaucracy).

Again, on the server market; the Itanium hasn't been the sure bet; as many stayed with other RISC platforms and others are using x86-64 for server applications. For instance, in one contract:

http://www.amdboard.com/hn10210201.html

The market doesn't always go as some marketers/PR people might plan... And BTW, I wasn't talking the print server either... There have been arguments made against the IA-64 ISA, including some which were made by some of DEC's former engineers as this stuff was being hashed out, some of which were picked up by Intel, some of which were picked up by AMD. Many servers also still chose to run on AIX using neither proc platform also... On another front, OS X has itself looked to be not completely unviable for a server OS, given that it's essentially BSD, with Apple's UI on the front end.

Actually the limitation I mentioned (along with the problems they're running into) has been on people's minds, with some forward thinking, and predictions which didn't put the limitation decades into the future. Again, there were some marketing changes which were made around this time, and also research which has been ongoing in light of it all. There was a slowing due to problems which were encountered hitting new die shrinkage levels. TSMC and UMC were definitely running into problems with their .13 micron manufacturing process which affected clients such as nVidia, and .09 hit Intel among others. It was also around these times that in the end the P4 got scrapped, and Intel ended up picking up x86-64 with Core Duo and the like.

For Intel it was actually a wise move. The Pentium 4 was arguably a bad design; which also got rushed to market (leaving out some things initially planned for it, until Northwood's release). This left the initial Pentium 4 2.5 GHz underperforming the 1 GHz Pentium III on benchmark after benchmark. Websites such as Tom's Hardware Guide, Anandtech, and the like were not kind in the analysis after running benchmarks. It also spelled the end to the clock rate is everything myth; as no longer did people have to take AMD's word, they could see it when they upgraded their PIII to the brand spanking new P4. Northwood made up some of this; but still... And to have continued to accelerate clock rate increases sufficiently enough. The Athlon had an advantage over the PIII because it was the new architecture. The P6 (starting with the Pentium Pro) was by then an old arch that had already advanced a fair degree having already grown a fair (and respectable) degree by then, competing against a platform with a lot of room for growth.

When Core Duo and the like came around, it came in as the newcomer against the then older Athlon 64 (as well as X2) platform, and had its own headway.... It also included some improvements; but at the same time it represented the scrapping of an arch, and adopting AMD's approach in moving the product line forward.

Actually Moore's law has been mentioned wrt both. But as to why they aren't rapidly increasing clock rate, I mentioned it above. Thing is (and for the record I never said clock rate is a good way to gauge processor performance), this is the metric Intel's marketers, as opposed to their engineers, wanted to use in their marketing strategies. Main reason: they could sell this to the consumer more easily, without the consumer having to know much. AMD had the harder job, trying to explain that their "slower" processor was actually more efficient, so not exactly the under-performer it got the reputation for being (in part due to such marketing strategies). Until the Athlon (which could finally beat the PIII to the 1 GHz mark, largely due to being the new arch on the block, introduced much later with a higher starting clock and a lot of room for growth still), AMD had always been held as the "cheap" alternative, or the lower end of the market. Well, at least since AMD and Intel broke off their partnership, which had existed from the days when IBM went with the 8088 for their PC and demanded multiple sources of supply.

But in the end Intel was left, after all the marketing gimmickry, having to explain why their P4 2.5 GHz couldn't out-perform the 1 GHz PIII (a certain eating of crow, as it validated their competitor's argument, and not even in comparing their competitor's product to their own). And then after this, the problems I highlighted started to manifest. Whether or not the marketing dept or PR people liked it in terms of their PR pitch, I could very well see Intel's engineers in the end sensing a certain degree of prudence in giving them a heavy dose of reality around the time this went down.

_________________

. |

|

| Back to top |

|

|

JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

|

Posted: Fri Sep 25, 2009 1:00 am Post subject: |

|

|

You know that x86-64 is just an instruction set right? The reason it's the most prevalent in servers is the same reason it's the most prevalent in desktops; market share. Nobody wants to write for multiple architectures. Even Mac is no longer a unique architecture.

I'm not sure what you mean by print server. As for AIX, that's what I meant. The x86-64 market share is primarily "small" servers. Stuff that's just desktop level equipment in a form factor that makes it easier to manage (and, in theory, with enterprise level parts for better MTBF). I meant the big iron stuff, 128 way and up platforms especially. The x86-64 stuff is just now, bleeding edge, creeping into the 128 way space, while the Itaniums have been in it for a long time, and the specialized stuff like "supercomputers" have been in it for what, decades?

As for the x86-64 stuff AMD vs Intel, it's all very very old news at this point. Nobody cares any more. It's all just AMD64. That's why I questioned you bringing it up in the first place. Sure, actually making any USE of it is relatively new, but the instruction set and capability itself has been around in both camps for like 5 years now. In computer terms, ages. Nobody is changing that, at least not that I've heard. Except, of course, that x86 is finally going to bite the dust in the next few years.

In regards to clock speed, that was my point. It's never been a good metric. Even as far back as the VIC 20 and 8086 the #'s were misleading unless you were comparing chips from the same company. As for all the marketing stuff, I wouldn't know, or care. I'm not your average consumer. And I don't know what place the marketing spiel has in a discussion about the technology. I remember the DEC guys clocking a 600 MHz Alpha all the way up over 1 GHz (maybe by a significant margin, it's been a long time) using liquid nitrogen. Nobody cared except us SETI nerds who were all drooling at how fast it could crunch a WU lol.

In modern terms, there are X2 chips running over 6 GHz (maybe 7 by now) with extreme cooling. Think about it historically. That's a chip with thousands of times the transistors of the P4, running way over its clock, doing more PER clock ta boot. There's plenty of headroom left in all of this stuff, none of it is pushing the hardware envelope yet. Even on the cooling front, a space where I wouldn't normally think there could be revolutionary changes, there are 2 coming down the pipeline in the next few years. One is the materials shift away from copper and aluminum because they've found much better ways, and forms, to get the job done. The second is microchannel cooling, or more specifically boiling within microchannels, because they surprised themselves by discovering it's exponentially more effective thermally than just heating the fluid like in a normal heat pipe. Combine that with the fact that better manufacturing (i.e. from 45 to 32 to 22 to 12) brings with it lower power levels to accomplish the same goals, which means less heat for the same processing power, which means you're free to clock up or add complexity (Intel is doing both right now).

As to the pace of things... 3000nm 1979, 1500nm 1982, 1000nm 1985, 800nm 1989, 600nm 1994, 350nm 1995, 250nm 1998, 180nm 1999, 130nm 2001, 90nm 2003, 65nm 2006, 45nm 2007, 32nm 2009 (planned), 22nm 2011 (planned), 16nm they hit the wall with our current technology.
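For anyone who wants to see the pace rather than eyeball it, here is a rough Python sketch that walks the node list quoted above and prints the gap in years and the linear shrink ratio for each step. The function names are made up for illustration, the years are simply the ones given in this post (the 32nm and 22nm entries were only planned dates), and the 16nm entry is left out because no year is attached to it.

```python
# Process-node timeline as quoted in the post: (feature size in nm, year).
# The 32nm and 22nm years are "planned" dates, not confirmed releases.
NODES = [
    (3000, 1979), (1500, 1982), (1000, 1985), (800, 1989), (600, 1994),
    (350, 1995), (250, 1998), (180, 1999), (130, 2001), (90, 2003),
    (65, 2006), (45, 2007), (32, 2009), (22, 2011),
]


def shrink_steps(nodes):
    """Return (old_nm, new_nm, years_between, linear_ratio) for each step."""
    steps = []
    for (old_nm, old_year), (new_nm, new_year) in zip(nodes, nodes[1:]):
        steps.append((old_nm, new_nm, new_year - old_year, new_nm / old_nm))
    return steps


def average_years_per_step(nodes):
    """Average number of years between consecutive nodes in the list."""
    return (nodes[-1][1] - nodes[0][1]) / (len(nodes) - 1)


if __name__ == "__main__":
    for old_nm, new_nm, years, ratio in shrink_steps(NODES):
        print(f"{old_nm:>4}nm -> {new_nm:>4}nm: {years} yr, x{ratio:.2f} linear")
    print(f"average: {average_years_per_step(NODES):.2f} years per step")
```

The ratio here is linear feature size only; it says nothing about cost or transistor count, which is what the rest of this argument is really about.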

Thing is, if you had told them in 2001 that we'd be running 100x the processor through the same voltage with a million times the transistors on a 32nm process they'd tell you no way. Not because it would be sci-fi, in fact most of this stuff was functional in a lab waaaaay before it hit market, but because the cost would be inconceivable. Hell, Toshiba started demonstrating bits and pieces of processes required for 16nm manufacturing... in 2005 (sadly they haven't gotten much closer since then). My point is that the limitations we see today won't exist when it matters. Hell, they weren't expecting 16nm manufacturing until 2018 and by then I'd be surprised if we're still on anything resembling binary computers with their silly x64 instruction sets and limitations.

Moore's law has been applied to a lot of things, but specifically only deals with transistor counts at cost I believe. Again, what the marketing people apply it to is of no interest to me.

At any rate, we've wandered far afield.

_________________

Stats: [BOINC Synergy] - [Free-DC] - [MundayWeb] - [Netsoft] - [All Project Stats] |

|

| Back to top |

|

|

Nuadormrac

Prince

Joined: 13 Sep 2009

Posts: 506

|

Posted: Fri Sep 25, 2009 7:13 am Post subject: |

|

|

| JerWA wrote: | | You know that x86-64 is just an instruction set right? |

It is also the processor which uses that ISA. Same as one can talk about x86 both in terms of the ISA, as well as the processor family which uses the ISA.

| Quote: | | The reason it's the most prevalent in servers is the same reason it's the most prevalent in desktops; market share. |

And in part, that was my point. The market had chosen, and whichever direction it chose, when the day is done no degree of marketing can reverse the decisions of the customers. They can try to sell, try to appeal, try to advertise in ways to "convince" people to buy a certain way, but in the end they can't control how people will spend those $.

| Quote: | | Nobody wants to write for multiple architectures. Even Mac is no longer a unique architecture. |

OS-X being ported to the x86 is nothing new to me, and hasn't been for some time.

| Quote: | | I'm not sure what you mean by print server. |

It doesn't take much of a server to run a print server (basically the print queue for an entire group, such as a single floor, net block, or however one divides up the printing resources for a large corporate building where people don't want to have to walk to 1 central location).

| Quote: | | As for AIX, that's what I meant. The x86-64 market share is primarily "small" servers. |

Not in its entirety. Look at the supercomputer which Sandia was having built for them through a bid contract.... That was no desktop level machine.

I never was talking solely about the lower end of the server market, though it might have been interpreted as if I was. Thing is, the market really didn't go for IA-64 in many sectors. Many had gone more the x86-64 route, and you said it yourself in part; people don't want to have to support yet another arch, and one that wasn't without question. DEC's engineers had serious reservations about the compiler optimizations IA-64 would require, and even referred to it as being based on a failed experiment (in assumptions), stuff they had looked at before and discarded. DEC's engineers weren't kind in the comparison, as they ripped it to shreds, and were then planning a generation change to not just compete against it, but obliterate it in their estimation. That product didn't come to light (for a variety of business/market reasons); but those same engineers took the know-how that went into it with them when they came under the employ of AMD as well as Intel.

It also ought to go without saying that their ideas were considered good ones as a design team, given the lawsuits which in those days were pending against other proc makers for having infringed upon their patents.... If something good wasn't seen, people wouldn't have gone to the trouble/risk of "stealing" their ideas.

| Quote: | | As for the x86-64 stuff AMD vs Intel, it's all very very old news at this point. Nobody cares any more. It's all just AMD64. That's why I questioned you bringing it up in the first place. |

Initially I hadn't. Initially I mentioned that either on a CPU or GPU things could get a bit more involved, and showed an example where it could. Conversations obviously do evolve. And yes, in the end it was settled, as Intel went the way the market was clear it wanted to go. They had a backup plan from their initial plans of leaving x86 as 32-bit and never going to a 64-bit x86 proc (what from their own PR statements they wanted to do); with Yamhill, but were hoping they'd not have to go that route. The Athlon64 sold too well for them to do that, and the P4 just couldn't, well...

| Quote: | | Sure, actually making any USE of it is relatively new, but the instruction set and capability itself has been around in both camps for like 5 years now. In computer terms, ages. Nobody is changing that, at least not that I've heard. Except, of course, that x86 is finally going to bite the dust in the next few years. |

Perhaps, but time will tell. I seriously doubt IA-64 would come as its replacement, just as it hasn't so far.

| Quote: | | In regards to clock speed, that was my point. It's never been a good metric. Even as far back as the VIC 20 and 8086 the #'s were misleading unless you were comparing chips from the same company. |

Which is also why the partnership ended, and AMD and Intel became competitors (with Motorola being kicked outa the partnership). They weren't exactly happy about certain things.

| Quote: | | As for all the marketing stuff, I wouldn't know, or care. I'm not your average consumer. And I don't know what place the marketing spiel has in a discussion about the technology. |

There's a point/counterpoint in terms of what happened, and how things moved. Intel couldn't continue to sustain the rapid increases in clock, and well, the rest is history, and their own marketers had to change their tune.

| Quote: | | In modern terms, there are X2 chips running over 6 GHz (maybe 7 by now) with extreme cooling. |

The problem here is that it requires extreme cooling, which isn't itself new; people could get similar clock rate increases off old procs in the past when Kryotech was used. Comparing an old proc using a fan/sink to a new one using extreme cooling wouldn't be an apples to apples comparison. One would need to use stock cooling in both cases, or something like Kryotech in both cases, to keep the comparisons even. Extreme cooling isn't representative of what would happen when it's operated with standard cooling, in a room at standard room temperature. And on standard cooling, this envelope is nearing its end.

| Quote: | | One is the materials shift away from copper and aluminum because they've found much better ways, and forms, to get the job done. The second is microchannel cooling, or more specifically boiling within microchannels, because they surprised themselves by discovering it's exponentially more effective thermally than just heating the fluid like in a normal heat pipe. |

The material changes have been going on for a time. Though each of these things taken together you're looking at are attempts at extending the current technology base, as a suitable replacement hasn't been fully designed yet. Quantum computers are still relatively small, and the technology isn't yet advanced to the point where one could have a large scale computer, using it, running in one's server closet.

The fact that so much research is being put into trying to find ways around the heat problem, however, exists precisely because there is one; and there's an attempt to buy time while a suitable replacement technology base is being sorted out. Thing is, whatever the case might be with the problems being encountered, people really aren't going to want to replace an entire PC to get one that barely out-performs their current system, and for the $ spent they kind of expect (rather than just want) some more significant improvements over the older system. Then there's the grandma/grandpa who probably doesn't pay as much attention (beyond how fast their cards in cribbage get dealt) but who also isn't a prime candidate for frequent hardware replacements either. No way, for instance, would I have replaced a Pentium II 266 with a Pentium II 333, as the cost of a new system wouldn't warrant such a small gain in performance, etc.

| Quote: | | combined with the fact that better manufacturing (i.e. from 45 to 32 to 22 to 12) brings with it lower power levels to accomplish the same goals, which means less heat for the same processing power, which means you're free to clock up or add complexity (Intel is doing both right now). |

My point was that this is slowing down in terms of shrinking the manufacturing process, precisely because problems have been getting hit as they get closer to, well, the limit. They also have not been pushing clock, as they had to back off. Look at the clock on a Pentium 4 in its final generation.

http://en.wikipedia.org/wiki/Pentium_4

| Quote: | | The fastest mass-produced Prescott-based processor was clocked at 3.8 GHz. |

| Quote: | | Finally, the heat problems were so severe, Intel decided to abandon the NetBurst microarchitecture altogether, and attempts to introduce a 4 GHz processor were abandoned, as a waste of internal resources. Intel realized that it would be wiser to head towards a "wider" CPU architecture with a lower clock speed to keep heat levels down while still increasing the throughput of the CPU. |

Now let's compare that to today's clock speeds.

http://en.wikipedia.org/wiki/Intel_Core_i7

| Quote: | | Max. CPU clock 2.66 GHz to 3.33 GHz |

3.33 GHz on a Core i7 !> 3.8 GHz on a Pentium 4. This in itself is what I was pointing out. The Pentium 4s were seeing higher clock rates, years ago, than the processors coming out today. Sure, if one wants to compare an x86-64 of today to the original Athlon 64s, the clock went up; hell, my A64 3500+ runs at 2.1 GHz, but that was not the highest clock rate achieved using stock cooling (aka the manufacturer-set clock, with the amount of timing margin which was established).

| Quote: | | My point is that the limitations we see today won't exist when it matters. Hell, they weren't expecting 16nm manufacturing until 2018 and by then I'd be surprised if we're still on anything resembling binary computers with their silly x64 instruction sets and limitations. |

Well, the limitations have been mattering hitherto, which is why drastic steps have been taken, along with the attempts to extend the silicon-based semiconductor technology base. Peeps such as those I mentioned knew that we were running into this, and also knew that a replacement wasn't going to be here in time (replacements they themselves were working on, trying to sort out the necessary science/engineering to make it possible). But they also knew that with the limits closing in, and a replacement technology still a ways off, they'd also have to work on ways to extend the current technology base, given that customer expectations for more raw power and performance wouldn't diminish over time. Some people back then were also arguing in favor of multi-processing even at the desktop level, and doing stuff in parallel, back when it was kinda unheard of for standard use. The argument was the same: they would need to make this change before the end if they were going to continue to meet expectations for generational performance gains.

That there will be a replacement for the current technology base, we are in agreement. It's a matter of how long it will take to get sorted out to a degree that commercial application will be feasible. For instance, with quantum computers, they are still grappling with the complexity involved when the size of the thing scales large enough for commercial/industrial use. In the meantime, there are attempts at extending the current technology base until this can happen, and happen full force...

_________________

. |

|

| Back to top |

|

|

JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

|

Posted: Fri Sep 25, 2009 10:24 am Post subject: |

|

|

If you want to look at clocks look at capability, not stock limitations. I can't help that Intel uses horrible coolers.

That same i7 920 is regularly clocked to 3.6-3.8 GHz on air, and 4.0 GHz on water.

Look at rev 2 of that family, the i5 750 and i7 860/870, and you'll see that they overclock themselves automatically by over 600 MHz even with the crappy stock cooler, and despite being just released there are plenty of sites giving you exact settings to get the 750 up to 3.8-4 GHz already, same with the 860. Their limitation, compared to the 1366 chips, is the onboard components requiring so much voltage since they moved the PCIe controller onboard.

The supercooling I mention also brings "super" results. Here's a video (already outdated) showing some 6 GHz and 6.5 GHz tests. The heat issue actually gets easier to address as your manufacturing gets better, not harder, because you're producing less of it. Again, my first example, the i7 920 vs the i7 860. They benchmark almost identically, they overclock almost identically, they have a very similar feature set (920 has more controllers and pathways, 860 has PCIe onboard) but even in that one rev change they've dropped from a 130W thermal to 95W.
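To put a number on that thermal drop, here is a tiny Python sketch using only the two TDP figures quoted above. The helper name is just for illustration.

```python
def percent_reduction(old, new):
    """Percentage drop going from old to new."""
    return (old - new) / old * 100.0


# TDP figures as quoted in the post: i7 920 at 130 W, i7 860 at 95 W.
tdp_drop = percent_reduction(130, 95)
print(f"TDP reduction: {tdp_drop:.1f}%")  # prints "TDP reduction: 26.9%"
```

That is roughly a quarter less rated heat for chips that benchmark about the same, going by the figures in this post.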

So again, these new chips, despite being exponentially more complex, ARE capable of clocking as high as that crappy P4, without any serious effort, and with 4 cores ta boot. For the average consumer who doesn't know? Of course not, but again, what do I care about those people? They're the same idiots the marketing departments are trying to sell to. I disagree when you say we're approaching some final limitation on this front, as every chip still continues to be better, and when they hit the heat envelope they work on efficiency instead of complexity. They've been doing that cycle a long time.

Here's some of the materials stuff I'm talking about:

http://frostytech.com/articleview.cfm?articleID=2424

Even that's already out of date, as I mentioned before they just this week made a discovery regarding boiling coolant in microchannels instead of just heating it. It's cool stuff. I don't think we're anywhere near the limits of heat dispersion yet, let alone the limit of optimization on the chip itself.

As for IA64 replacing x86, no way. Like I said, it's a dead issue. I happen to work with prototypes from both sides, stuff that's typically near-market (6-12 months), in terms of the OS, and I've not heard a whisper of any nefarious plan by Intel to change instruction sets. At least not anything drastic, they're just playing with SSE stuff now, and trying to get away from BIOS. But if they were planning an architecture shift I'd know, because my employer would have to play along or it'd be dead before it ever went on sale.

RE: Sandia, I'm talking market share, not one-offs. The DEC Alphas in the desktop space were essentially one-offs too; that doesn't mean that RISC on the desktop was ever a player. In the server space IA64 has a niche, it's been there a long time, and it doesn't appear to be going away despite lots of people's attempts to kill it. I've seen first hand proof that development is still alive and well on the IA64 front. <shrug> But again, no desktop stuff, not even a whiff.

_________________

Stats: [BOINC Synergy] - [Free-DC] - [MundayWeb] - [Netsoft] - [All Project Stats] |

|

| Back to top |

|

|

Adam Alexander

Prince

Joined: 25 Oct 2008

Posts: 1626

Location: The looNItic fringe

|

Posted: Fri Sep 25, 2009 10:42 am Post subject: |

|

|

JerWA,

I had no luck getting this to run on my Win 7 box. I used the Cat 9.9 drivers and the first WU errored out. Then I checked the project boards and saw a thread about installing a run time. Did that, downloaded 90 odd WU's and every last one of them errored out on me. HELP! Right now that box is back to shrubbing SETI with an 8800 GT.

_________________

Currently running:

Active

Intel Core 2 Quad 9550

Reserves

Now down to one Intel Core 2 Quad Q6700 that's in a tiny case and overheats if I actually try to use all four cores at the same time. On the other hand, it makes for a very nice home theater PC

Intel Core i7 CPU 920

AMD Athlon(tm) 7850 |

|

| Back to top |

|

|

JerWA

Prince

Joined: 01 Jan 2007

Posts: 1497

Location: WA, USA

|

Posted: Fri Sep 25, 2009 11:23 am Post subject: |

|

|

Adam,

You have to copy 3 DLLs and rename them before it will work (this is caused by the fact they're using an old CAL version that's hard coded to look for the 3 files with the old name).

1. Open command prompt as Administrator

2. execute "cd %systemroot%\system32"

3. execute "copy atical*.dll amdcal*.dll"

What you're looking to end up with is these files: amdcalrt64.dll, amdcalcl64.dll, amdcaldd64.dll, aticalrt64.dll, aticalcl64.dll, aticaldd64.dll

The Catalyst 9.2 and newer drivers use ATI in the name, but CAL is looking for AMD (which they were named prior to 9.2). If the above commands don't work, you can do it by hand in the explorer by finding the three ATICAL files (aticaldd64 aticalrt64 aticalcl64) and copying them (do not rename the originals), then rename the copies to be AMDCAL (amdcaldd64 amdcalrt64 amdcalcl64).
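If you'd rather script it than do it by hand, something along these lines should give the same result. This is only a sketch in Python, not anything from the Collatz project: the function name is made up, and it assumes you have Python installed and run it from an elevated prompt so it can write into system32. It copies each atical*.dll to a matching amdcal*.dll, leaves the originals alone, and skips any copy that already exists.

```python
import shutil
from pathlib import Path


def copy_atical_to_amdcal(system_dir):
    """Copy every atical*.dll in system_dir to an amdcal*.dll twin.

    Originals are left in place and existing amdcal copies are not
    overwritten. Returns the list of files that were created.
    """
    created = []
    for src in sorted(Path(system_dir).glob("atical*.dll")):
        # Swap the "atical" prefix for "amdcal", keep the rest of the name.
        dst = src.with_name("amdcal" + src.name[len("atical"):])
        if not dst.exists():
            shutil.copy2(src, dst)
            created.append(dst)
    return created
```

To use it you would call copy_atical_to_amdcal with the path to your system32 folder (the same one as %systemroot%\system32 in step 2) and check that the returned list contains the three amdcal files named above.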

_________________

Stats: [BOINC Synergy] - [Free-DC] - [MundayWeb] - [Netsoft] - [All Project Stats] |

|

| Back to top |

|

|

Adam Alexander

Prince

Joined: 25 Oct 2008

Posts: 1626

Location: The looNItic fringe

|

Posted: Fri Sep 25, 2009 11:28 am Post subject: |

|

|

| JerWA wrote: | Adam,

You have to copy 3 DLLs and rename them before it will work (this is caused by the fact they're using an old CAL version that's hard coded to look for the 3 files with the old name).

1. Open command prompt as Administrator

2. execute "cd %systemroot%\system32"

3. execute "copy atical*.dll amdcal*.dll"

What you're looking to end up with is these files: amdcalrt64.dll, amdcalcl64.dll, amdcaldd64.dll, aticalrt64.dll, aticalcl64.dll, aticaldd64.dll

The Catalyst 9.2 and newer drivers use ATI in the name, but CAL is looking for AMD (which they were named prior to 9.2). If the above commands don't work, you can do it by hand in the explorer by finding the three ATICAL files (aticaldd64 aticalrt64 aticalcl64) and copying them (do not rename the originals), then rename the copies to be AMDCAL (amdcaldd64 amdcalrt64 amdcalcl64). |

Thanks. I guess I misread the "How to make ATI cards work" instructions on the Collatz message board. I'll let you know how it goes.

_________________

Currently running:

Active

Intel Core 2 Quad 9550

Reserves

Now down to one Intel Core 2 Quad Q6700 that's in a tiny case and overheats if I actually try to use all four cores at the same time. On the other hand, it makes for a very nice home theater PC

Intel Core i7 CPU 920

AMD Athlon(tm) 7850 |

|

| Back to top |

|

|

|

|


|

|
